Big Data business intelligence entails new dimensions in the volume, velocity, and variety of data available to an organization. To create value from Big Data, intelligent data management is needed. Big Data management (BDM) is the process of value creation using Big Data. Business enterprises are forced to adapt and to implement Big Data strategies, even though they do not always know where to start. To address this issue, a BDM reference model can provide a frame of orientation to initiate new Big Data projects.

Image Credit: Pixabay

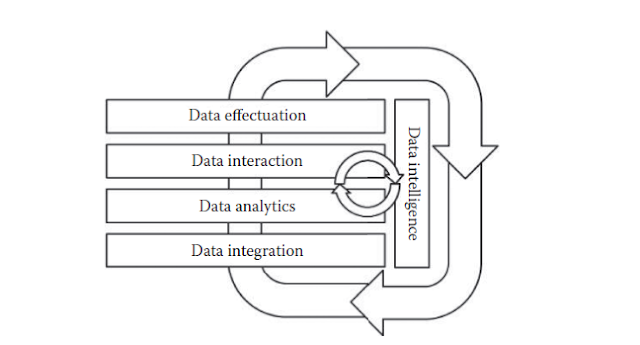

The offered BDMcube model shifts from an epistemic view of a cognitive system to a management view in a layer-based reference model. Kaufmann (2016) reported that the offered model “can be seen as a metamodel, where specific BDM models for a company or research process represent specific instances implementing certain aspects of the five layers.” BDMcube is a metamodel, or a model of models. It can be substantiated by deriving specific BDM models from it. Thus, it can be used for the classification and enhancement of existing BDM strategies, and it can provide a frame of reference, a creativity tool, and a project management tool to create, derive, or track new Big Data projects.

BDMcube is based on five layers that serve as a frame of reference for the implementation, operation, and optimization of BDM (Kaufmann 2016). Each of the five layers is described in detail here.

Data Integration defines the collection and combination of data from different sources into a single platform. The layer handles the involved database systems and the interfaces among all data sources with special attention to the system scalability and the common characteristics of Big Data, like the volume, velocity, and variety of data (sources).

Data Analytics describes the transformation of raw data into information via involvement of analytical processes and tools. Therefore, Big Data and analytical and machine learning systems have to operate within a scalable, parallel computing architecture.

Data Interaction is a layer which deals with analytical requirements of users and the results of the data analysis in order to create new organizational knowledge. Furthermore, Kaufmann (2016) reported that “it is important to note that data analysis results are in fact nothing but more data unless users interact with them.”

Data Effectuation describes the usage of data analytics results to create added values to products, services, and the operation of organizations.

Data Intelligence defines the task of knowledge management and knowledge engineering over the whole data life cycle, in order to deal with the ability of the organization to acquire new knowledge and skills. Furthermore, the layer offers a cross-functional, knowledge-driven approach to operate with the knowledge assets which are deployed, distributed, and utilized over all layers of Big Data management.

The following two models provide specific instances of BDMcube utilization in the areas of data visualization and data mining. Each of these models reflects the five aspects of BDMcube in its respective domain of application.

Interaction with Big Data Management Visual Data Views

Big Data analysis is based on different perspectives and intentions. To support management functions in their ability to make sustainable decisions, Big Data analysis specialists fill the gaps between Big Data analysis results for consumers and Big Data technologies. Thus, these specialists need to understand their consumers’ and customers’ intentions and to have strong technology skills, but they are not the same as developers, because they focus more on potential impacts on the business (Upadhyay and Grant 2013).

Deduced from these perspectives and intentions, different use cases and related user stereotypes can be identified for performing Big Data analyses collaboratively within an organization. Users with the highest contextual level, e.g., managers at different hierarchy levels of such organizations, need to interact with visual analysis results for their decision-making processes. On the other hand, users with low contextual levels, like system owners or administrators, need to interact directly with data sources, data streams, or data tables for operating, customizing, or manipulating the systems. Nevertheless, user stereotypes with lower contextual levels are interested in visualization techniques as well, provided that these techniques are focused on their lower contextual levels.

Finally, there are user stereotype perspectives between these extremes, representing the connection between user stereotypes with low and high contextual levels. Given these various perspectives and contextual levels, it is important to provide the different user stereotypes with a context-aware system for their individual use cases: “The ‘right’ information, at the ‘right’ time, in the ‘right’ place, in the ‘right’ way, to the ‘right’ person” (Fischer 2012). One could add to this quotation “with the right competences,” or perhaps “with the right user empowerment.”

Big Data Management with the Visualization Pipeline and Its Transformation Mappings

Information visualization (IVIS) has emerged “from research in human-computer interaction, computer science, graphics, visual design, psychology, and business methods” (Cook et al. 2006). Nevertheless, IVIS can also be viewed as a result of the quest for interchanging ideas and information between humans, in keeping with Rainer Kuhlen’s work (2004), because of the absence of a direct interchange. The most precise and common definition of IVIS as “the use of computer-supported, interactive, visual representations of abstract data to amplify cognition” stems from the work of Card et al. (Kuhlen 2004). To simplify the discussion about information visualization systems and to compare and contrast them, Card et al. (as reported by Kuhlen 2004) defined a reference model, illustrated above, for mapping data to visual forms for human perception.

In this model, arrows lead from raw data to a visual presentation of that data within a cognitively efficient IVIS, based on a visual structure and its rendering as a view that is easy for humans to perceive and interact with. The arrows indicate a series of data transformations, and each arrow might stand for multiple chained transformations. Moreover, additional arrows from the human at the right into the transformations themselves indicate the adjustment of these transformations by user-operated controls supporting human–computer interaction (Kuhlen 2004). Data transformations map raw data, such as text data, processing information, database tables, emails, feeds, and sensor data, into data tables, which define the data with relational descriptions and extended metadata (Beath et al. 2012, Freiknecht 2014). Visual mappings transform data tables into visual structures that combine spatial substrates, marks, and graphical properties. Finally, view transformations create views of the visual structures by specifying graphical parameters, such as position, scaling, and clipping (Kuhlen 2004). As Kuhlen (2004) summarized this issue, “Although raw data can be visualized directly, data tables are an important step when the data are abstract, without a direct spatial component.” Therefore, Card et al. (1999) defined the mapping of a data table to a visual structure, i.e., a visual mapping, as the core of a reference model, as it translates the mathematical relations within data tables to graphical properties within visual structures.
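The three transformation stages can be made concrete with a minimal Python sketch. It is an illustration only, not an implementation taken from Card et al.: the function names, the `Mark` class, and the sample sensor lines are all assumptions introduced here. The `scale` and `x_max` parameters stand in for the user-operated controls that adjust a transformation.

```python
from dataclasses import dataclass


@dataclass
class Mark:
    """Hypothetical visual structure element: a point mark with a label."""
    x: float
    y: float
    label: str


def data_transformation(raw_lines):
    """Raw data -> data table: parse 'name, value' text lines into rows."""
    table = []
    for line in raw_lines:
        name, value = line.split(",")
        table.append({"name": name.strip(), "value": float(value)})
    return table


def visual_mapping(table):
    """Data table -> visual structure: row index becomes x, value becomes y."""
    return [Mark(x=float(i), y=row["value"], label=row["name"])
            for i, row in enumerate(table)]


def view_transformation(marks, scale=1.0, x_min=0.0, x_max=float("inf")):
    """Visual structure -> view: clip marks to an x range, then scale them."""
    return [Mark(m.x * scale, m.y * scale, m.label)
            for m in marks if x_min <= m.x <= x_max]


# Invented sample data; a user control narrows the view to the first two marks.
raw = ["sensor_a, 2.0", "sensor_b, 3.5", "sensor_c, 1.0"]
view = view_transformation(visual_mapping(data_transformation(raw)),
                           scale=10.0, x_max=1.0)
for mark in view:
    print(mark)
```

Keeping each stage as a separate function mirrors the chained arrows of the reference model: a user control changes one stage's parameters without touching the others.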

Introduction to the IVIS4BigData Reference Model

The hybrid, refined, and extended IVIS4BigData reference model, an adaptation of the IVIS reference model in combination with Kaufmann’s BDM reference model, covers the new conditions of the present situation with advanced visual interface opportunities for perceiving, managing, and interpreting Big Data analysis results to support insights. Integrated into the underlying reference model for BDM, which illustrates different stages of BDM, the adaptation of the IVIS reference model represents the interactive part of the BDM life cycle.

According to Card et al. (1999), arrows which indicate a series of (multiple) data transformations lead from raw data to data presentation to humans. However, instead of collecting raw data from a single data source, multiple data sources can be connected, integrated by means of mediator architectures, and in this way globally managed in data collections inside the data collection, management, and curation layer. The first transformation, which is located in the analytics layer of the underlying BDM model, maps the data from the connected data sources into data structures, which represent the first stage in the interaction and perception layer. The generic term “data structures” also includes the use of modern Big Data storage technologies (e.g., NoSQL, RDBMS, HDFS), instead of using only data tables with relational schemata. The following steps are visual mappings, which transform data tables into visual structures, and view transformations, which create views of the visual structures by specifying graphical parameters such as position, scaling, and clipping; these steps do not differ from the original IVIS reference model. Interacting only with analysis results, however, does not by itself lead to added value, for example for the optimization of research results or business objectives. Furthermore, no process steps are currently located within the insight and effectuation layer, because added values from this layer are generated instead from knowledge, which is a “function of a particular perspective” (Nonaka and Takeuchi 1995) and is generated within this layer by combining the analysis results with existing knowledge.

The major adaptations are located between the cross-functional knowledge-based support layer and the corresponding layers above. As a consequence of the various perspectives and contextual levels of Big Data analysis and management user stereotypes, additional arrows lead from the human users on the right to multiple views. These arrows illustrate the interactions of user stereotypes with single process stages and the adjustments of the respective transformations by user-operated controls to provide the right information, at the right time, in the right place, in the right way, to the right person (Fischer 2012), within a context-aware and user-empowering system for individual use cases. Finally, the circulation around all layers clarifies that IVIS4BigData is not solely a one-time process, because the results can be used as the input for a new process circulation.

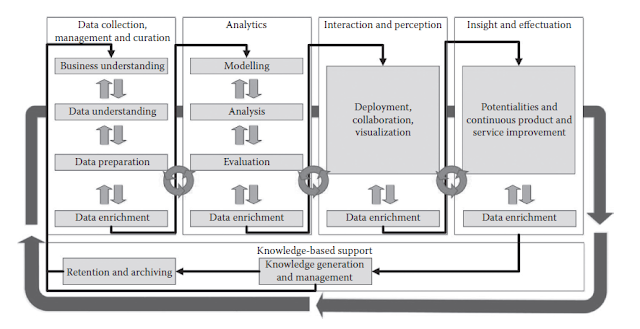

The CRISP4BigData Reference Model

The CRISP4BigData reference model (Berwind et al. 2017) is based on Kaufmann’s reference model for BDM (Kaufmann 2016) and Bornschlegl et al.’s (2016) IVIS4BigData reference model. IVIS4BigData was evaluated during an AVI 2016 workshop in Bari. The overall scope and goal of the workshop were to achieve a road map that could support acceleration in research, education, and training activities by means of transforming, enriching, and deploying advanced visual user interfaces for managing and using eScience infrastructures in virtual research environments (VREs). In this way, the research, education, and training road map paves the way towards establishing an infrastructure for a visual user interface tool suite supporting VRE platforms that can host Big Data analysis and corresponding research activities sharing distributed research resources (i.e., data, tools, and services) by adopting common existing open standards for access, analysis, and visualization. Thereby, this research helps realize a ubiquitous collaborative workspace for researchers which is able to facilitate the research process and potential Big Data analysis applications (Bornschlegl et al. 2016).

CRISP4BigData is an enhancement of the classical cross-industry standard process for data mining (CRISP-DM), which was developed by the CRISP-DM Consortium (comprising DaimlerChrysler [then Daimler-Benz], SPSS [then ISL], NCR Systems Engineering Copenhagen, and OHRA Verzekeringen en Bank Groep B.V.) with the target of handling the complexity of data-mining projects (Chapman et al. 2000).

The CRISP4BigData reference model is based on Kaufmann’s five sections of Big Data management, which include (i) data collection, management, and curation, (ii) analytics, (iii) interaction and perception, (iv) insight and effectuation, and (v) knowledge-based support, along with the standard four-layer methodology of the CRISP-DM model. The CRISP4BigData methodology (based on the CRISP-DM methodology) describes a hierarchical process model consisting of a set of tasks arranged in four layers: phase, generic task, specialized task, and process instance.

Within each of these layers are a number of phases (e.g., business understanding, data understanding, data preparation), analogous to those of the original CRISP-DM model but extended by some new phases (e.g., data enrichment, retention, and archiving).

Each of these phases consists of second-level generic tasks. This layer is called generic because it is deliberately general enough to cover all conceivable use cases. The third layer, the specialized task layer, describes how the actions located in the generic tasks should be carried out and how they should differ in different situations. For example, the specialized task layer handles the way data should be processed: “cleaning numeric values versus cleaning categorical values, [and] whether the problem type [calls for] clustering or predictive modeling” (Chapman et al. 2000).

The fourth layer, the process instance, is a set of records of running actions, decisions, and results of the actual Big Data analysis. The process instance layer is “organized according to the tasks defined at the higher levels, but represents what actually happened in a particular engagement, rather than what happens in general” (Chapman et al. 2000).
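The four-layer hierarchy can be pictured as a nested data structure. The following Python sketch is a hypothetical illustration, not part of the CRISP4BigData specification: the class names simply mirror the four layers named above, and the recorded action and result are invented example values.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessInstance:
    """Layer 4: a record of what actually happened in one engagement."""
    action: str
    result: str


@dataclass
class SpecializedTask:
    """Layer 3: how a generic task is carried out in a specific situation."""
    name: str
    instances: list = field(default_factory=list)


@dataclass
class GenericTask:
    """Layer 2: a task general enough to cover all use cases."""
    name: str
    specialized: list = field(default_factory=list)


@dataclass
class Phase:
    """Layer 1: a phase such as data preparation."""
    name: str
    tasks: list = field(default_factory=list)


clean_numeric = SpecializedTask("clean numeric values")
clean_numeric.instances.append(
    # Invented example record of a concrete run.
    ProcessInstance(action="dropped rows with missing readings",
                    result="12 rows removed")
)
clean_categorical = SpecializedTask("clean categorical values")
phase = Phase("data preparation",
              [GenericTask("clean data", [clean_numeric, clean_categorical])])

# Walk the hierarchy from phase down to the number of recorded instances.
for task in phase.tasks:
    for spec in task.specialized:
        print(phase.name, ">", task.name, ">", spec.name, ">", len(spec.instances))
```

The upper three layers describe what should happen in general, while only `ProcessInstance` objects hold facts about a particular project run.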

Data Collection, Management, and Curation

The data collection, management, and curation phase is equivalent to the phase of the same name in Bornschlegl et al.’s IVIS4BigData reference model and the data integration phase of the Big Data Management reference model. The phase defines the collection and combination of data from different sources into a single platform. The layer handles the involved database systems and the interfaces to all data sources, with special attention to system scalability and the common characteristics of Big Data, like volume, velocity, and variety.

The phases of this layer, i.e., business understanding, data understanding, data preparation, and data enrichment, are described in detail here. Some of these subordinate phases are identical to the phases of the same name in CRISP-DM.

Business understanding

The business understanding phase describes the aims of the project in order to generate a valid project plan for coordination purposes. It is important to formulate an exact description of the problem and to ensure an adequate task definition. A targeted implementation of the intended analytical methods can then generate added value. In parallel, this includes reconciling the analytical processes with the organizational structures and processes of the company. While considering existing parameters, the key parameters of the analytical project should be defined. The following generic tasks have to be processed:

- Determine business objectives

- Assess the initial situation

- Infrastructure and data structure analysis

- Determine analysis goals

- Produce project plan

Within the scope of the analysis of the situation, first the problem is described from an economic point of view and should then be analyzed. Based on this first analysis step, a task definition is derived. Thereby, the parameters that contribute to the achievement of objectives of the project should be recognized and explored. Likewise, it is necessary to list hardware and software resources, financial resources, and the available personnel and their different qualifications. In another subphase, all possible risks should be described, along with all possible solutions for the listed risks. From the economic aim and the analysis of the situation, some analytical aims should be formulated. In another subphase, different types of analytical methods will be assigned to corresponding tasks and success metrics can be formulated, which are reflected in the evaluation of the results of the analysis.

During complex analytical projects, it can be meaningful, based on economic and technical aims, to generate a project plan. The underlying project plan supports the timing coordination of the processes and tasks. Furthermore, within each phase of the analysis, the resources, restrictions, inputs and outputs, and time scales allocated have to be determined. In addition, at the beginning of the project, cost-effectiveness considerations are necessary, accompanied by an assessment of the application possibilities, considering restrictions of human, technical, and financial resources.

Data understanding

The data understanding phase focuses on the collection of the project-relevant internal and external data. The aim of this phase is a better understanding of the underlying data, which will be used during the analysis process. First insights into the data or database might emerge during the initial collection of data. This phase supports such ad hoc insights through visualization techniques or methods of descriptive statistics.

The following generic tasks deal with the quantity and quality of the available data, and under some circumstances such tasks should be performed a number of times.

A return to the previous phase is always possible; this is especially important if the results of the data understanding phase endanger the whole project. The focus of this phase is the selection and collection of data from different sources and databases. Therefore, it is useful to use so-called metadata, which offer information about the volume or the format of the available data. With an already prepared database, the data warehouse is the first choice of data sources.
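A first look at the quantity and quality of a data source can be as simple as a descriptive summary of each column. The Python sketch below is a minimal illustration using only the standard library; the `describe` helper and the sample readings are assumptions made here, not part of CRISP4BigData.

```python
import statistics


def describe(values):
    """Summarize a numeric column: volume, missing values, basic statistics."""
    present = [v for v in values if v is not None]
    return {
        "count": len(values),                    # quantity of records
        "missing": len(values) - len(present),   # simple quality indicator
        "min": min(present),
        "max": max(present),
        "mean": statistics.mean(present),
        "median": statistics.median(present),
    }


# Invented sample column with one missing value.
readings = [4, 8, None, 6, 2]
summary = describe(readings)
print(summary)
```

A high share of missing values in such a summary is the kind of finding that may justify a return to the business understanding phase.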

Data preparation

The data preparation phase aims to describe the preparation of the data which should be used for the analysis. The phase includes the following generic tasks: define the data set, select data for analysis, enrich data, clean data, construct data, integrate data, and format data. All generic tasks have the aim to change, delete, filter, or reduce the data in order to obtain a high-quality data set for the analysis.

Based on the equivalent aspects of the CRISP4BigData data preparation phase and the CRISP-DM data preparation phase, we can estimate that 80% of resources (time, technology, and personnel) used in the whole CRISP4BigData process are committed to the CRISP4BigData data preparation phase (cf. Gabriel et al. 2011). Furthermore, Gabriel et al. described several additional tasks (e.g., data cleansing and transformation) that should be included within the generic tasks (or in addition to generic tasks).

- Data cleansing: This phase describes all provisions with the aim of high quality data, which is very important for the applicability of the analysis (and techniques) and interpretability of the analysis results. This task extends, for example, to data merging, the multiplicative or additive linking of characteristics.

- Transformation: It is the aim of the transformation phase to change the data into a data format that is usable within the Big Data applications and frameworks. This step also contains formatting of the data into a usable data type and domain. This phase distinguishes alphabetical, alphanumerical, and numerical data types (cf. Gabriel et al. 2011).
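The two tasks above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the `cleanse` and `transform` helpers, the field names, and the sample records are invented here, and real Big Data frameworks would apply the same idea at scale.

```python
def cleanse(records):
    """Drop records with missing fields and remove exact duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        if any(v is None or v == "" for v in rec.values()):
            continue  # incomplete record
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            cleaned.append(rec)
    return cleaned


def transform(records, schema):
    """Cast each field to the data type named in the schema."""
    return [{name: cast(rec[name]) for name, cast in schema.items()}
            for rec in records]


raw = [
    {"id": "1", "city": "Bern", "temp": "21.5"},
    {"id": "1", "city": "Bern", "temp": "21.5"},  # exact duplicate
    {"id": "2", "city": "", "temp": "19.0"},      # missing city
    {"id": "3", "city": "Hagen", "temp": "18"},
]
# Alphabetical field stays a string; numerical fields become int or float.
schema = {"id": int, "city": str, "temp": float}
prepared = transform(cleanse(raw), schema)
print(prepared)
```

Running cleansing before transformation means the type casts only ever see complete records.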

Data enrichment

Data enrichment describes “the process of augmenting existing data with newer attributes that offer a tremendous improvement in getting analytical insights from it. Data enrichment often includes engineering the attributes to add additional data fields sourced from external data sources or derived from existing attributes” (Pasupuleti and Purra 2015). In this context, data enrichment is used for the collection and selection of all appreciable process information to reuse the analysis process and analysis results in a new iteration or a new analysis process. In addition, during this phase, information about the analysis stakeholders (e.g., analysts, data scientists, users, requesters) is collected to get insights about, for example, who is using the analysis (process), who is an expert in a needed domain, and which resources (e.g., analyses, diagrams, and algorithms) are reusable.
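The general definition quoted above names two sources of new attributes: fields derived from existing attributes and fields taken from external data. A minimal Python sketch of both follows; the `enrich` helper, the sales records, and the region lookup are hypothetical examples, and the sketch does not cover the process-information and stakeholder aspects specific to CRISP4BigData.

```python
def enrich(records, external_lookup):
    """Add one derived attribute and one field from an external lookup."""
    enriched = []
    for rec in records:
        new = dict(rec)  # copy so the input record stays unchanged
        # Derived from existing attributes: revenue per unit sold.
        new["unit_price"] = rec["revenue"] / rec["units"] if rec["units"] else None
        # Sourced externally: the store's region, if the lookup knows it.
        new["region"] = external_lookup.get(rec["store"], "unknown")
        enriched.append(new)
    return enriched


# Invented sample data and external source.
sales = [
    {"store": "S1", "revenue": 200.0, "units": 8},
    {"store": "S2", "revenue": 90.0, "units": 0},
]
regions = {"S1": "north"}
result = enrich(sales, regions)
print(result)
```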

Analytics

Modeling

The modeling phase describes the design of a Big Data analysis model which matches the requirements and business objectives with the aim of a high significance of the analysis. Within this phase, suitable frameworks, libraries, and algorithms will be selected to analyze the underlying data set based on the business objectives.

Note that, in some circumstances, “not all tools and techniques are applicable to each and every task. For certain problems, only some techniques are appropriate … ‘Political requirements’ and other constraints further limit the choices available to the data … engineer. It may be that only one tool or technique is available to solve the problem at hand—and that the tool may not be absolutely the best, from a technical standpoint” (Chapman et al. 2000).

It is also necessary to define a suitable test design which contains a procedure to validate the model’s quality. The test design should also contain a plan for the training, testing, and evaluation of the model. Further, it must be decided how the available data set should be divided into a training data set, a test data set, and an evaluation data set.
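As a minimal sketch of such a division, the following Python function shuffles the records reproducibly and cuts them into three disjoint parts. The 70/15/15 ratio, the fixed seed, and the name `split_dataset` are illustrative assumptions; the source does not prescribe any particular proportions.

```python
import random


def split_dataset(records, train=0.70, test=0.15, seed=42):
    """Split records into disjoint training, test, and evaluation sets.

    The evaluation set receives whatever remains after the training and
    test shares have been taken.
    """
    if train <= 0 or test <= 0 or train + test >= 1:
        raise ValueError("train and test must be positive and sum to less than 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_train = round(len(shuffled) * train)
    n_test = round(len(shuffled) * test)
    training = shuffled[:n_train]
    testing = shuffled[n_train:n_train + n_test]
    evaluation = shuffled[n_train + n_test:]
    return training, testing, evaluation


if __name__ == "__main__":
    training, testing, evaluation = split_dataset(range(100))
    print(len(training), len(testing), len(evaluation))
```

Fixing the seed keeps the split stable across runs, which makes later comparisons of models and parameter settings repeatable.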

Analysis

This phase describes the assessment of the analysis (model and analysis results); therefore, it is necessary to ensure that the analysis matches the success criteria. Further, it is necessary to validate the analysis based on the test design and the business objectives concerning validity and accuracy. Within this phase, the following activities should be carried out to obtain a suitable and meaningful model, analysis, and results (cf. Chapman et al. 2000):

- Evaluate and test the results with respect to evaluation criteria and according to a test strategy

- Compare the evaluation results and interpretation to select the best model and model parameters

- Check the plausibility of the model in the context of the business objectives

- Check the model, analysis, and results against the available knowledge base to see if the discovered information is useful

- Check why certain frameworks, libraries, and algorithms and certain parameter settings lead to good or bad results

Evaluation

The evaluation phase describes the comprehensive assessment of the project progression, with the main focus on the assessment of the analysis results and the assessment of the whole CRISP4BigData process (cf. Gabriel et al. 2011).

Within the evaluation phase the analysis process and analysis results must be verified by the following criteria: significance, novelty, usefulness, accuracy, generality, and comprehensibility (Chapman et al. 2000, Gabriel et al. 2011). Further, all results must match the requirements and project aims (Gabriel et al. 2011). Following this, the CRISP4BigData process and data model should be audited concerning the process quality to reveal weak points and to design and implement improvements. Furthermore, the process should include the model test in a software test environment based on real use cases (or real applications) (Chapman et al. 2000). Finally, “depending on the results of the assessment and the process review, the project team decides how to proceed … to finish this project and move on to deployment” (Chapman et al. 2000).

Data Enrichment

The data enrichment phase is equal to the previously named subordinate data enrichment phase within the data collection, management, and curation phase.

Interaction and Perception

Deployment, Collaboration, and Visualization

The deployment, collaboration, and visualization phase describes how the CRISP4BigData process and the process analysis should be used. The following points are examples of information that should be described in detail and implemented within this phase:

- Durability of the process, analysis, and visualizations

- Grant access privileges for collaboration and access to results and visualizations

- Planning of sustainable monitoring and maintenance for the analysis process

- Presentation of final report and final project presentation

- Implementation of a service and service-level management

This phase also contains the creation of all needed documents (e.g., operational documents, interface designs, service-level management documents, mapping designs, etc.).

Data Enrichment

This data enrichment phase is equivalent to the above-described subordinate data enrichment phase within the data collection, management, and curation phase.

Insight and Effectuation

Potentialities and Continuous Product and Service Improvement

The phase for potentialities and continuous product and service improvement entails the application of the analysis data to accomplish added value for products and services, as well as to optimize the organization and organizational processes. According to the Big Data Management Reference model, the creation of added value is based on an efficiency increase in production and distribution (Kaufmann 2016), the optimization of machine operations (Kaufmann 2016), and improvement of the CRM (Kaufmann 2016).

Data Enrichment

This third data enrichment phase is also equivalent to the above-described subordinate data enrichment phase within the data collection, management, and curation phase.

Knowledge-Based Support

Knowledge Generation and Management

The knowledge generation and management phase entails the handling of the collected (process) information, collected information about the experts and users, and the created insights and ensuing fields of application, with the aim to collect these artifacts. These artifacts can be connected in a meaningful way to create a knowledge tank (knowledge base) and an expert tank, both of which can be applied for all steps of the data life cycle (Kaufmann 2016).

Retention and Archiving

The retention and archiving phase entails the retention and archiving of the collected data, information, and results during the whole CRISP4BigData process. This phase contains the following Generic Tasks and Outputs. The collected data can then be classified according to the Hortonworks (2016) classification model, as follows:

- Hot: Used for both storage and computing. Data that are being used for processing stay in this category.

- Warm: Partially hot and partially cold. When a block is warm, the first replica is stored on disk and the remaining replicas are stored in an archive.

- Cold: Used only for storage, with limited computing. Data that are no longer being used, or data that need to be archived, are moved from hot storage to cold storage. When a block is cold, all replicas are stored in the archive.

All the collected, archived, and classified data can be used as “new” databases for a new iteration of the CRISP4BigData process.
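The replica rules in the classification above can be written down as a small Python sketch. The storage-type labels `DISK` and `ARCHIVE` follow the naming used by HDFS storage policies, where the hot policy keeps all replicas on disk; the function name `replica_placement` and the default replication factor of 3 are assumptions for illustration.

```python
def replica_placement(temperature, replication=3):
    """Return the storage type of each replica of a block.

    hot:  all replicas on disk
    warm: first replica on disk, remaining replicas in the archive
    cold: all replicas in the archive
    """
    if replication < 1:
        raise ValueError("replication must be at least 1")
    if temperature == "hot":
        return ["DISK"] * replication
    if temperature == "warm":
        return ["DISK"] + ["ARCHIVE"] * (replication - 1)
    if temperature == "cold":
        return ["ARCHIVE"] * replication
    raise ValueError(f"unknown temperature: {temperature!r}")


if __name__ == "__main__":
    for temperature in ("hot", "warm", "cold"):
        print(temperature, replica_placement(temperature))
```

Moving a data set from hot to cold therefore changes only where its replicas live, not how many of them exist.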

Preparatory Operations for Evaluation of the CRISP4BigData Reference Model within a Cloud-Based Hadoop Ecosystem

The offered CRISP4BigData Reference Model, which supports scientists and research facilities in maintaining and managing their research resources, is currently being evaluated in a proof-of-concept implementation. The implementation is based on the conceptual architecture for a VRE infrastructure called the Virtual Environment for Research Interdisciplinary Exchange (unpublished article of Bornschlegl), whose goal is to handle the whole analytical process by (automatically) managing all the needed information, analytical models, algorithms, library knowledge resources, and infrastructure resources to obtain new insights and optimize products and services.

As a preparatory operation, Matthias Schneider (2017) provided in his thesis a prototypical implementation for executing analytic Big Data workflows using selected components of a Cloud-based Hadoop ecosystem within the EGI Federated Cloud (Voronov 2017). The selection of components was based on the requirements for engineering a VRE. Its overall architecture is presented below, followed by two diverse domain-specific use cases implemented in this environment. The use cases are connected to the EU co-funded SenseCare project, which “aims to provide a new effective computing platform based on an information and knowledge ecosystem providing software services applied to the care of people with dementia” (Engel et al. 2016).

Architecture Instantiation

Apache Ambari is used as an overall Hadoop cluster management tool, and it is extendable via use of Ambari Management Packs for service deployment. Hue is a user-friendly interface that allows the analyst to model work flows and access the HDFS. These actions are executed by Oozie using MapReduce or Spark operating on YARN, with files stored in HDFS or HBase tables. Sqoop can be used for data management, and Sentry can be used for authorization.

Use Case 1: MetaMap Annotation of Biomedical Publications via Hadoop

As a part of the SenseCare Project, information retrieval for medical research data, supported by algorithms for natural language processing, was evaluated. One approach would be to use MetaMap, a tool developed at the U.S. National Library of Medicine to facilitate text analysis on biomedical texts, in order to extract medical terms from a comprehensive database of a vast volume of medical publications. In order to process this Big Data using Hadoop, the MetaMap server was embedded into an Ambari Management Pack to distribute it on the nodes of the Hadoop cluster. Using MapReduce as the execution framework, a map method was developed to perform the actual processing of the input files from the database, which yielded the extracted medical terms as result, while a reducer method filtered duplicates of these terms before writing them into the distributed file system.
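The division of labor between the map and reduce methods can be sketched in plain Python, without Hadoop. This is not the implementation from the thesis: the real map method calls a MetaMap server, whereas the sketch uses a tiny hypothetical vocabulary (`KNOWN_TERMS`) as a stand-in, and `shuffle` imitates the grouping that the MapReduce framework performs between the two steps.

```python
from collections import defaultdict

# Hypothetical stand-in for MetaMap: a fixed vocabulary of medical terms.
KNOWN_TERMS = {"dementia", "alzheimer", "memory"}


def map_document(doc_id, text):
    """Map step: emit a (term, doc_id) pair for every known term found."""
    for token in text.lower().split():
        word = token.strip(".,;:()")
        if word in KNOWN_TERMS:
            yield word, doc_id


def shuffle(pairs):
    """Group emitted values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_term(term, doc_ids):
    """Reduce step: collapse all occurrences of a term into a single output."""
    return term


def run_job(documents):
    """Run map, shuffle, and reduce over a dict of {doc_id: text}."""
    pairs = [
        pair
        for doc_id, text in documents.items()
        for pair in map_document(doc_id, text)
    ]
    return sorted(reduce_term(term, ids) for term, ids in shuffle(pairs).items())


if __name__ == "__main__":
    docs = {
        "pub-1": "Dementia affects memory. Memory loss is an early sign.",
        "pub-2": "Alzheimer and dementia research.",
    }
    print(run_job(docs))
```

Because grouping by key already brings all duplicates of a term together, the reducer only has to emit each key once to filter them out.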

Use Case 2: Emotion Recognition in Video Frames with Hadoop

While Hadoop is often used for textual processing, Use Case 2 evaluated the processing of binary data. Here, video data was evaluated, with the goal of recognizing emotions from facial expressions, via computer vision algorithms. To avoid handling of multiple video formats in Hadoop, the video files were preprocessed and partial frames were extracted to images. These images were stored in a special container format that allowed division into chunks when stored in HDFS, in order to achieve distributed processing using MapReduce. The actual processing was realized via a mapper method that called OpenCV, a library that extracted facial landmarks, among other computer vision functions. Facial landmarks are distinctive spots in the human face, e.g., the corner of one’s mouth, or the position of the nose or the eyes. Emotions are derived from the relative positions of these facial landmarks. In subsequent tasks, these results can be rated and analyzed.
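To illustrate why relative rather than absolute landmark positions are used, the following Python sketch expresses each landmark relative to the midpoint between the eyes and scales it by the distance between the eyes. This normalization, the landmark names, and the function `relative_landmarks` are assumptions for illustration; the source does not describe how the use case computes relative positions, and the sketch does not call OpenCV.

```python
import math


def relative_landmarks(landmarks):
    """Express landmarks relative to the eye midpoint, scaled by eye distance.

    `landmarks` maps a name to an (x, y) pixel position and must contain
    the keys "left_eye" and "right_eye".
    """
    left_x, left_y = landmarks["left_eye"]
    right_x, right_y = landmarks["right_eye"]
    scale = math.hypot(right_x - left_x, right_y - left_y)
    if scale == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    center_x = (left_x + right_x) / 2
    center_y = (left_y + right_y) / 2
    return {
        name: ((x - center_x) / scale, (y - center_y) / scale)
        for name, (x, y) in landmarks.items()
    }


if __name__ == "__main__":
    face = {"left_eye": (100, 100), "right_eye": (200, 100), "mouth_left": (110, 200)}
    print(relative_landmarks(face))
```

With such a representation, the same face yields the same features regardless of how large it appears in the frame or where it is located.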

Hadoop Cluster Installation in the EGI Federated Cloud

A cluster installation using fixed hardware is rigid in terms of its resource utilization. Therefore, Cloud computing approaches are gaining in popularity, because they allow a more balanced usage of hardware resources through virtualization. Especially when instantiating VREs, a cloud environment is advantageous, as it allows maximum flexibility concerning computational and storage resources. In order to gather experience with a Cloud environment for the Hadoop VRE introduced above, the EGI Federated Cloud was evaluated in Andrei Voronov’s thesis (Voronov 2017).

MetaMap Annotation Results

As a first benchmark, the annotation process for biomedical publications using MetaMap (Use Case 1) was executed in a local cluster with two nodes and compared to the execution in a cluster within the EGI CESNET-MetaCloud, a provider of EGI, with five nodes. One thousand biomedical full-text publications were processed in the local cluster within six hours, while the EGI Federated Cloud needed as long as 15 hours for the same tasks.

A remarkable result was that the execution times in the EGI Federated Cloud varied massively (13 to 16 hours), while the execution time on the local cluster remained quite constant. This illustrates the shared character of the Cloud environment provided by the EGI. During the evaluation phase and multiple executions of this long-lasting batch-oriented process, no down time occurred. Thus, even with no guaranteed resources and varying computational power, the EGI Federated Cloud seems to be a reliable service.
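The reported figures can be put on a common scale with a short calculation. The sketch below uses only the numbers stated above (1,000 publications, six hours on two local nodes, 15 hours on five cloud nodes); treating 15 hours as the representative cloud run time and the helper name `throughput` are assumptions.

```python
def throughput(publications, hours, nodes):
    """Return (publications per hour, publications per hour per node)."""
    per_hour = publications / hours
    return per_hour, per_hour / nodes


local_rate, local_per_node = throughput(1000, 6, 2)
cloud_rate, cloud_per_node = throughput(1000, 15, 5)

print(f"local cluster: {local_rate:.1f}/h, {local_per_node:.1f}/h per node")
print(f"EGI cloud:     {cloud_rate:.1f}/h, {cloud_per_node:.1f}/h per node")
print(f"slowdown of the cloud run: {15 / 6:.1f}x")
```

This gives roughly 166.7 publications per hour locally (83.3 per node) against 66.7 per hour in the cloud (13.3 per node), so the cloud run was 2.5 times slower overall despite having more nodes.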
