Before proceeding to our discussion of the Big Data Hadoop ecosystem, it is important to know that Hadoop and data warehouses serve different purposes. Each has its own business intelligence (BI) strategies and tools. The basic difference between classic BI and Big Data BI tools lies in how requirements are handled. In classic BI, needs and requirements are clearly defined up front (Ronquillo 2014). Big Data BI tends to capture everything and does not depend on that clarity. A data warehouse is only associated with structured data, while Big Data includes all data.

Image Credit: pixabay

What Is the Hadoop Ecosystem?

Hadoop is an open source project of the Apache Foundation. It is a framework written in Java that was developed by Doug Cutting, who named it after his son’s toy elephant. It is one of the most effective and best-known solutions for handling massive quantities of data, which can be structured, unstructured, or semistructured, using commodity hardware. Hadoop makes use of Google’s MapReduce technology as its foundation. Many have a misconception that Hadoop is a replacement for relational database systems. Structured data act as the basic building blocks for most companies and enterprises, because structured data are easier to handle and process than unstructured and semistructured data. Hadoop is not a replacement for the traditional relational database system, as it is not suitable for online transaction processing workloads, where structured data are accessed randomly. In such cases, relational database systems can be quite handy. Hadoop is known for its parallel processing power, which offers great performance potential.

How Does Hadoop Ecosystem Tackle Big Data Challenges?

There are three key trends that are changing the way the entire industry stores and uses data (Olson 2010). These trends are:

- Instead of having a large and centralized single server to accomplish the tasks, most organizations are now relying on commodity hardware, i.e., there has been a shift to scalable, elastic computing infrastructures.

- Because of proliferating data at various IT giants, organizations, and enterprises, there has been an eventual rise in the complexity of data in various formats.

- Combinations of two or more data sets may reveal valuable insights that may not have been revealed by a single data set alone.

These trends make Hadoop a major platform for data-driven companies. We’ve seen many challenges in Big Data analytics. Now, let us understand how the Hadoop ecosystem can help us overcome these challenges.

Storage Problem

As Hadoop is designed to run on a cluster of machines, desired storage and computational power can be achieved by adding or removing nodes for a Hadoop cluster. This reduces the need to depend on powerful and expensive hardware, as Hadoop relies on commodity hardware. This solves the problem of storage in most cases.

Various Data Formats

We’ve seen that data can be structured, semistructured, or unstructured. Hadoop doesn’t enforce any schema on the data being stored. It can handle any arbitrary text. Hadoop can even digest binary data with great ease.

Processing the Sheer Volume of Data

Traditional methods of data processing involve separate clusters for storage and processing. This strategy involves rapid movement of data between the two categories of clusters. However, this approach can’t be used to handle Big Data. Hadoop clusters are able to manage both storage and processing of data. So, the rapid movement of data is reduced in the system, thus improving the throughput and performance.

Cost Issues

Storing Big Data in traditional storage can be very expensive. As Hadoop is built around commodity hardware, it can provide desirable storage at a reasonable cost.

Capturing the Data

With Hadoop, many enterprises are now able to capture and store all the data that are generated every day. The ready availability of up-to-date data allows complex analysis, which in turn can provide valuable insights.

Durability Problem

As the volume of data stored increases every day, companies generally purge old data. With Hadoop, it is possible to store the data for a considerable amount of time. Historical data can be a key factor while analyzing data.

Scalability Issues

Along with distributed storage, Hadoop also provides distributed processing. Handling large volumes of data now becomes an easy task. Hadoop can crunch a massive volume of data in parallel.

Issues in Analyzing Big Data

Hadoop provides us with rich analytics. There are various tools, like Pig and Hive, that make the task of analyzing Big Data an easy one. BI tools can be used for complex analysis to extract the hidden insights.

Before we proceed to components of Hadoop, let us review its basic terminology.

- Node: A node simply means a computer. This is generally a piece of nonenterprise commodity hardware that contains data.

- Rack: A rack is a collection of 30 to 40 nodes that are physically stored close to each other and are all connected to the same network switch. Network bandwidth between two nodes on the same rack is greater than bandwidth between two nodes on different racks.

- Cluster: A collection of racks can be termed a cluster.

The two main components of Hadoop are HDFS and MapReduce. By using these components, Hadoop users have the potential to overcome the challenges posed by Big Data analytics. Let us discuss each component.

HDFS

HDFS (Hadoop distributed file system) is designed to run on commodity hardware. The differences from other distributed file systems are significant. Before proceeding to the architecture, let us focus on the assumptions and core architectural goals associated with HDFS (Borthakur 2008).

1. Detection of faults

An HDFS instance may be associated with numerous server machines, each storing part of the file system’s data. Since there are huge numbers of components, each component has a nontrivial probability of failure, implying that some component of HDFS is always nonfunctional. Detection of such faults and failures followed by automatic recovery is a core architectural goal of HDFS.

2. Streaming data access

Applications that run across HDFS need streaming data access to their data sets. HDFS is designed for batch processing rather than interactive use. The priority is given to throughput of data access over low latency of data access.

3. Large data sets

HDFS is designed to support large data sets. A typical file in HDFS is gigabytes to terabytes in size. HDFS should provide high data bandwidth to support hundreds of nodes in a single cluster.

4. Simple coherency model

HDFS applications need a write-once/read-many access model for files. This assumption minimizes data coherency (consistency) issues and enables high-throughput data access.

5. Moving computation

The computation requested by an application is very efficient if it is executed near the data on which it operates. Instead of migrating the data to where the application is running, migrating the computation minimizes network congestion, and overall throughput of the system is maximized. HDFS provides the required interfaces to the applications to achieve the same.

6. Portability

HDFS supports easy portability from one platform to another.
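The "moving computation" goal above can be illustrated with a very small scheduling sketch. The function `pick_node` and the node names are hypothetical and are not part of any Hadoop API; the sketch only shows the idea of preferring a free node that already stores the block over one that would have to fetch it across the network.

```python
def pick_node(block_locations: list[str], free_nodes: list[str]) -> tuple[str, bool]:
    """Choose where to run a task that reads one block.

    Returns (node, is_local). A node that already holds the block is
    preferred, so the computation moves to the data rather than the
    data moving to the computation.
    """
    if not free_nodes:
        raise RuntimeError("no free nodes available")
    for node in free_nodes:
        if node in block_locations:
            return node, True          # data-local: no network transfer needed
    return free_nodes[0], False        # fallback: block must be read remotely


local_choice = pick_node(["dn2", "dn3"], ["dn1", "dn3"])
remote_choice = pick_node(["dn2"], ["dn1"])
```

In this sketch `local_choice` picks `dn3` because it holds a replica, while `remote_choice` falls back to `dn1` and would need to read the block over the network.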

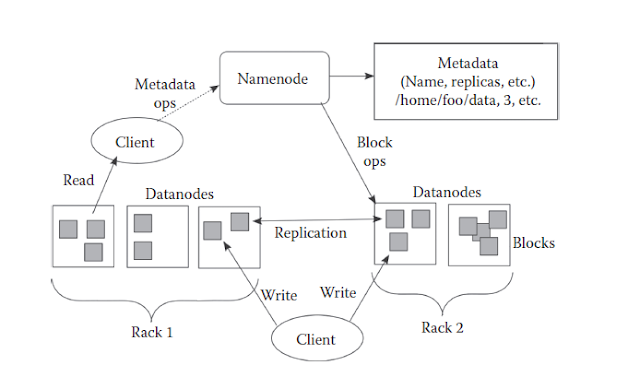

Hadoop Architecture

HDFS has a master–slave architecture. It is mainly comprised of name and data nodes. These nodes are pieces of software designed to run on commodity machines. An HDFS cluster contains a single name node, a master server that manages file system name space and regulates access to files by clients. Name node acts as a repository for all HDFS metadata. Generally, there is a dedicated machine that only runs the name node software. There can be numerous data nodes (generally one per node in a cluster) which manage storage attached to the nodes that they run on. HDFS allows the data to be stored in files. These files are further split into blocks, and these blocks are stored in a set of data nodes. We can see that the name node is responsible for mapping of these blocks to data nodes. We can also infer that data nodes are responsible for serving read and write requests from clients. Data nodes also perform block creation, deletion, and replication upon the instruction of name node.
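The division of labor between the name node and the data nodes can be sketched in a few lines of Python. This is a toy model under stated assumptions: the block size is deliberately tiny, the data-node names are made up, and replicas are placed round-robin, which is a simplification and not the placement policy HDFS actually uses.

```python
from itertools import cycle

BLOCK_SIZE = 4      # bytes per block; tiny on purpose (real HDFS blocks are far larger)
REPLICATION = 3     # copies kept of each block; three is a typical setting


def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut a file's contents into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(num_blocks: int, data_nodes: list[str],
                 replication: int = REPLICATION) -> dict[int, list[str]]:
    """Toy name-node metadata: block index -> data nodes holding a replica.

    Round-robin placement is an assumption made for illustration only.
    """
    if replication > len(data_nodes):
        raise ValueError("need at least as many data nodes as replicas")
    ring = cycle(data_nodes)
    return {b: [next(ring) for _ in range(replication)] for b in range(num_blocks)}


blocks = split_into_blocks(b"hello hadoop")
block_map = place_blocks(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
```

Here `block_map` plays the role of the metadata kept by the name node, while the entries of `blocks` stand for the data that the data nodes would actually store and serve.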

HDFS is designed to tolerate a high component failure rate through replication of data. HDFS software can detect and compensate for hardware-related issues, such as disk or server failures. As files are decomposed into blocks, each block is written to multiple servers (generally three). This type of replication ensures performance and fault tolerance in most cases. Since the data are replicated, any loss of data on a server can be recovered. A given block can be read from several servers, thus leading to increased system throughput. HDFS is best suited to work with large files. The larger the file, the less time Hadoop spends seeking the next data location on the disk. Seeks are generally costly operations that are useful only when a subset of a given data set needs to be read or analyzed. Since Hadoop is designed to handle the sheer volume of data sets, the number of seeks is reduced to a minimum by using large files. Hadoop is based on sequential data access rather than random access, as sequential access involves fewer seeks. HDFS also ensures data availability by continually monitoring the servers in a cluster and the blocks they manage. When a block is read, its checksum is verified, and in case of damage, it can easily be restored from its replicas.
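The checksum-and-replica recovery described above can be sketched as follows. This is a minimal illustration, not HDFS code: CRC32 from Python's `zlib` module is used only as a stand-in checksum, and the replica dictionary with data-node names `dn1` to `dn3` is hypothetical.

```python
import zlib


def checksum(block: bytes) -> int:
    """CRC32 of a block, used here as a stand-in for the checksum HDFS keeps."""
    return zlib.crc32(block)


def read_block(replicas: dict[str, bytes], expected: int) -> bytes:
    """Return a replica whose checksum matches and repair any damaged copies.

    `replicas` maps a (hypothetical) data-node name to that node's copy.
    """
    good = next((data for data in replicas.values() if checksum(data) == expected), None)
    if good is None:
        raise IOError("all replicas are corrupt")
    for node, data in list(replicas.items()):
        if checksum(data) != expected:
            replicas[node] = good      # restore the damaged copy from a healthy one
    return good


original = b"block-0 payload"
expected = checksum(original)
# dn1 holds a copy with one corrupted byte; dn2 and dn3 are intact.
replicas = {"dn1": b"block-0 pay\x00oad", "dn2": original, "dn3": original}
data = read_block(replicas, expected)
```

After the call, the read returns the intact payload and the damaged copy on `dn1` has been overwritten with a healthy replica.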

MapReduce

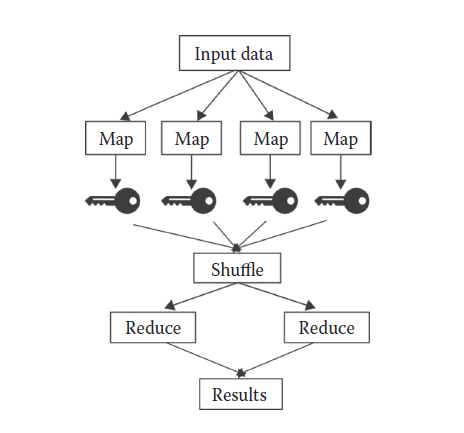

MapReduce is a framework designed to process parallelizable problems across huge data sets by making use of parallel data-processing techniques that typically involve large numbers of nodes. The term MapReduce refers to two separate and distinct tasks that Hadoop programs perform. The Map job takes a set of data and converts it into another set of data in which individual elements are broken down into tuples (key/value pairs). This is followed by the Shuffle operation, which transfers data from the Map tasks to the nodes where the Reduce tasks will run. The output of the Map job is given as input to the Reduce job, where the tuples are combined into a smaller set of tuples. As implied by the term MapReduce, the Reduce job is always performed after the Map job. Within each stage, the individual Map tasks (and likewise the individual Reduce tasks) run in parallel with one another. MapReduce also excels at exhaustive processing (Olson 2010). If an algorithm requires examination of every single record in a file in order to obtain a result, MapReduce is the best choice. The key advantage of MapReduce is that it can process petabytes of data in a reasonable time to answer a question. A user may have to wait for some minutes or hours, but is finally provided with answers to questions that would’ve been impossible to answer without MapReduce.

Having briefly looked at MapReduce, let us understand its execution. A MapReduce program executes in three stages, namely, the Map stage, the Shuffle stage, and the Reduce stage.

1. Map stage

The goal of this stage is to process input data. Generally, input data are in the form of a file or directory which resides in HDFS. When the input is passed to the Map function, several small chunks of data are produced as the result of processing.

2. Reduce stage

This stage combines the Shuffle stage with the Reduce stage proper. Here, the output of the Map function is grouped by key and further processed to create a new set of output. The new output will then be stored in HDFS.
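The stages described above can be sketched with the classic word-count example. This is a single-process illustration in plain Python, not Hadoop's Java API; the function names `map_stage`, `shuffle_stage`, and `reduce_stage` are chosen here for readability, and a real job would run many Map and Reduce tasks on different nodes.

```python
from collections import defaultdict


def map_stage(line: str) -> list[tuple[str, int]]:
    """Map: turn one input line into (word, 1) key/value pairs."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_stage(pairs: list[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all values that share a key, as if routing them to one reducer."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)


def reduce_stage(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine the values for one key into a single, smaller result."""
    return key, sum(values)


lines = ["big data big clusters", "data moves to big clusters"]
mapped = [pair for line in lines for pair in map_stage(line)]
grouped = shuffle_stage(mapped)
counts = dict(reduce_stage(key, values) for key, values in grouped.items())
```

Each call to `map_stage` depends only on its own line, and each call to `reduce_stage` only on its own key, which is what allows the tasks within a stage to be spread across many nodes.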

Hadoop: Pros and Cons

Talking of Hadoop, there are numerous perks that one should know. Some of the important perks are:

- Hadoop is an open source framework

- Its ability to schedule numerous jobs in smaller chunks

- Support for replication and failure recovery without human intervention in most cases

- One can construct MapReduce programs that allow exploratory analysis of data as per the requirements of an organization or enterprise.

Although Hadoop has proven to be an efficient solution against many challenges posed by Big Data analytics, it has got a few drawbacks. It is not suitable under the following conditions:

- To process transactions due to the lack of random access

- When work cannot be parallelized

- To process huge sets of small files

- To perform intensive calculation with limited data

Other Big Data-Related Projects

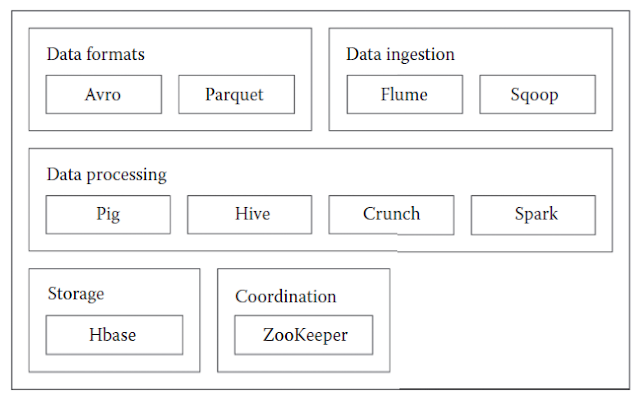

Hadoop and HDFS are not restricted to MapReduce. In this section, we give a brief overview of the technologies which use Hadoop and HDFS as the base. Here, we divided the most prominent Hadoop-related frameworks and classified them based on their applications.

Data Formats

Apache Avro

Apache Avro is a language-neutral data serialization system which was created by Doug Cutting (the creator of Hadoop) to address the considerable drawback associated with Hadoop: its lack of language portability. Avro provides rich data structures; a compact, fast, binary data format; a container file, to store persistent data; a remote procedure call (RPC); and simple integration with dynamic languages. Having a single data format that can be processed by several languages, like C, C++, Java, and PHP, makes it very easy to share data sets with an increasingly larger targeted audience. Unlike other systems that provide similar functionality, such as Thrift, Protocol Buffers, etc., Avro provides the following:

1. Dynamic Typing

Avro does not require code to be generated. A schema is always provided with the data which permits full processing of that data without code generation, static data types, etc.

2. Untagged data

Since the schema is provided with the data, considerably less type information is encoded with the data, resulting in smaller serialization size.

3. No manually assigned field ID

With every schema change, both the old and new schema are always present when processing data, so differences may be resolved symbolically, using field names.

Avro relies on schemas. As data are read in Avro, the schema used when writing it is always present. This permits each datum to be written with no per-value overheads, making serialization both fast and small. Avro schemas are usually written in JSON, and data are generally encoded in binary format.
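To make the role of schemas more concrete, the sketch below writes two Avro-style record schemas in JSON and resolves a record between them by field name. It uses only Python's standard `json` module and does not call the Avro library; the `User` record, its fields, and the `resolve` helper are hypothetical, and the function covers only a small part of Avro's schema resolution rules.

```python
import json

# Schema the data was written with.
WRITER_SCHEMA = json.loads("""
{
  "type": "record",
  "name": "User",
  "fields": [
    {"name": "id",    "type": "long"},
    {"name": "name",  "type": "string"},
    {"name": "email", "type": "string"}
  ]
}
""")

# Newer schema the reader expects: "email" dropped, "country" added with a default.
READER_SCHEMA = json.loads("""
{
  "type": "record",
  "name": "User",
  "fields": [
    {"name": "id",      "type": "long"},
    {"name": "name",    "type": "string"},
    {"name": "country", "type": "string", "default": "unknown"}
  ]
}
""")


def resolve(record: dict, writer: dict, reader: dict) -> dict:
    """Project a record onto the reader's schema, matching fields by name."""
    writer_names = {field["name"] for field in writer["fields"]}
    resolved = {}
    for field in reader["fields"]:
        name = field["name"]
        if name in writer_names:
            resolved[name] = record[name]        # present in both schemas
        elif "default" in field:
            resolved[name] = field["default"]    # new field: fall back to its default
        else:
            raise ValueError(f"no value or default for field {name!r}")
    return resolved                              # writer-only fields are dropped


written = {"id": 7, "name": "Ada", "email": "ada@example.com"}
resolved = resolve(written, WRITER_SCHEMA, READER_SCHEMA)
```

Because fields are matched by name rather than by a numeric tag, no field IDs need to be assigned or kept in sync by hand when the schema changes.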

Apache Parquet

Apache Parquet is a columnar storage format known for its ability to store data with a deeply nested structure in true columnar fashion, and it was created to make the advantages of compressed, efficient columnar data representation available to any project in the Hadoop ecosystem. Parquet uses the record shredding and assembly algorithm described in the Dremel paper. Columnar formats allow greater efficiency in terms of both file size and query performance. Since the data are in a columnar format, with the values from one column stored next to each other, encoding and compression become very efficient. For the same reason, file sizes are usually smaller than their row-oriented equivalents (White 2012). Parquet allows for reading nested fields independent of other fields, resulting in a significant improvement in performance.
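Why storing a column's values next to each other helps encoding can be shown with a toy example. The sketch below pivots a few made-up rows into columns and applies run-length encoding, which is used here as one simple illustration of a column-friendly encoding; it is not Parquet's actual on-disk format, and the helper names are hypothetical.

```python
from itertools import groupby

# Row-oriented view: each record keeps all of its fields together.
rows = [
    {"country": "US", "status": "ok"},
    {"country": "US", "status": "ok"},
    {"country": "US", "status": "error"},
    {"country": "DE", "status": "ok"},
    {"country": "DE", "status": "ok"},
]


def to_columns(records: list[dict]) -> dict[str, list]:
    """Pivot rows into columns so each column's values sit next to each other."""
    return {name: [record[name] for record in records] for name in records[0]}


def run_length_encode(values: list) -> list[tuple]:
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


columns = to_columns(rows)
encoded_country = run_length_encode(columns["country"])
encoded_status = run_length_encode(columns["status"])
```

The five `country` values collapse into two runs, and a query that needs only `country` can read that column without touching `status` at all.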

Data Ingestion

Apache Flume

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. Generally, it is assumed that data are already in HDFS or can be copied there in bulk at once. But many systems don’t meet these assumptions. These systems often produce streams of real-time data that one would like to analyze, store, and aggregate using Hadoop, for which Apache Flume is an ideal solution. Flume allows ingestion of massive volume of event-based data into Hadoop. The usual destination is HDFS. Flume has a simple and flexible architecture based on streaming data flows. It is robust and fault tolerant with tunable reliability mechanisms and many failover and recovery mechanisms. It uses a simple extensible data model that allows for online analytic application.

Apache Sqoop

There is often a need to access the data in storage repositories outside of HDFS for which MapReduce programs need to use external APIs. In any organization, valuable data are stored in structured format, making use of relational database management systems. One can use Apache Sqoop to import data from a relational database management system (RDBMS), such as MySQL or Oracle or a mainframe, into the HDFS, transform the data in Hadoop MapReduce, and then export the data back into an RDBMS. Sqoop automates most of this process, relying on the database to describe the schema for the data to be imported. Sqoop uses MapReduce to import and export the data, which provides parallel operation as well as fault tolerance.

Data Processing

Apache Pig

Apache Pig is a platform for analyzing large data sets that consists of a high-level language for expressing data analysis programs, coupled with an infrastructure for evaluating these programs. The main property of Pig programs is that their structure is amenable to substantial parallelization, which in turn enables them to handle huge data sets. Pig’s data structures allow the application of powerful transformations to the data. Pig allows the programmer to concentrate on the data, as the platform takes care of the execution procedure. It also provides the user with optimization opportunities and extensibility.

Apache Hive

Hive is a framework that grew from a need to manage and learn from the massive volumes of data that Facebook was producing every day (White 2012). Apache Hive is a data warehouse platform that provides reading, writing, and managing of large data sets in distributed storage using SQL. Hive has made it possible for analysts to run queries on huge volumes of Facebook data stored in HDFS. Today, Hive is used by numerous enterprises as a general-purpose data-processing platform. It comes with built-in support for Apache Parquet and Apache ORC.

Apache Crunch

Apache Crunch is a set of APIs which are modeled after FlumeJava (a library that Google uses for building data pipelines on top of their own implementation of MapReduce) and is used to simplify the process of creating data pipelines on top of Hadoop. The main perks over plain MapReduce are its focus on programmer-friendly Java types, richer sets of data transformation operations, and multistage pipelines.

Apache Spark

Apache Spark is a fast and general-purpose cluster computing framework for large-scale data processing. It was originally developed at the University of California, Berkeley’s AMPLab but was later donated to Apache. Instead of using MapReduce as an execution engine, it has its own distributed runtime for executing work on cluster (White 2012). It has an ability to hold large data sets in memory between jobs. Spark’s processing model best suits iterative algorithms and interactive analysis.

Storage

HBase

Apache HBase is an open source, distributed, versioned, nonrelational database modeled after Google’s Bigtable and built on top of HDFS; it allows real-time read/write random access to very large data sets. It has the capacity to host very large tables on clusters made from commodity hardware.

Coordination

ZooKeeper

ZooKeeper was created as Hadoop’s answer to the need for an open source server that enables highly reliable distributed coordination. It can be defined as a centralized service for maintaining configuration information, naming, providing distributed synchronization, and providing group services. These services are implemented by ZooKeeper so that applications do not need to implement them on their own.

Hadoop has many more applications, including Apache Mahout for Machine Learning and Apache Storm, Flink, and Spark to complement real-time distributed systems. As of 2009, application areas of Hadoop included log data analysis, marketing analysis, machine learning, sophisticated data mining, image processing, processing of XML messages, web crawling, text processing, general archiving of data, etc.
